Integrating tSQLt with Team Foundation Build Services continuous builds

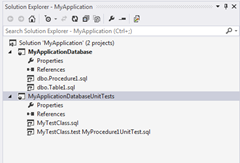

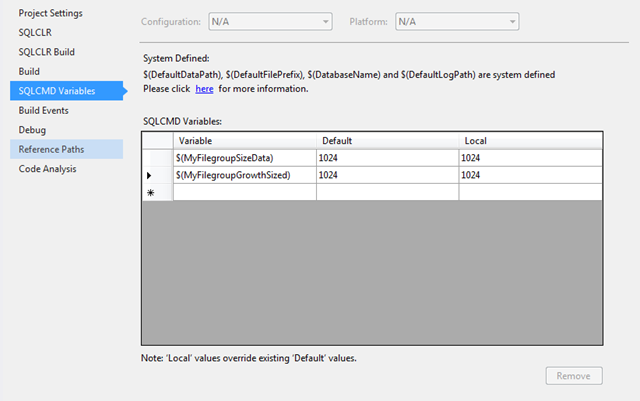

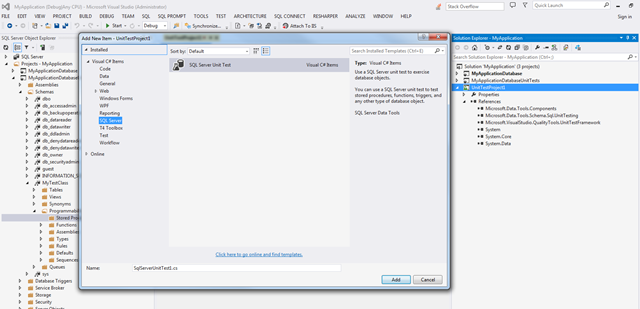

As I mentioned in a previous blog post, I have been integrating tSQLt, a SQL Server unit test framework, into my project life cycle. In this post I will share how I got my tSQLt unit tests executing within the continuous integration build, with their results influencing the outcome of the build. The continuous integration platform I am using is Team Foundation Build Services 2012 (TFBS). However, my approach will work with any continuous integration platform that handles MSBuild and MSTest, such as TeamCity.

Build Integration

As I had created my database unit tests within SQL Server Data Tools (SSDT), integrating them into the continuous integration build was straightforward: I extended the build definition to include the unit test project in the list of solutions/projects to be built. As I had my own solution, which was listed within the build definition, for all the database projects and other related projects, my unit tes