Source system data to warehouse via CDC.

Introduction

I have been working on a data warehouse project with a difference: the ETL does not perform the classic extract of source system data. Instead, the source system sends its data to the data warehouse using change data capture (CDC). The reasons for choosing CDC were as follows:

- To have the ETL process only the data that has changed within the source system between runs. This helps ETL performance, as it only has to process the changed data and does not have to work out what the changes were first.

- To keep the ETL processes from impacting the central source system database. The CDC would be responsible for delivering the source system's changes to another database server. The ETL could then run at any time of day without blocking the transactional source system. This mattered because there were plans to run the ETL at the end of the day for two time zones, e.g. US and UK.

Development

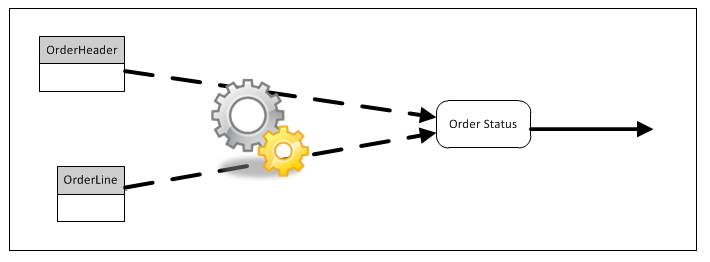

Early in development we discovered that we had to define what was meant by processing intraday changes. Did this mean every row captured by CDC within that day? In other words, should every captured change create a history change within the data warehouse, leaving the last change as current? Or did it mean capturing event changes within the day, for example scanning the changes for that day and finding the flags for the records that are required? For us it meant capturing event changes. Understanding the meaning of intraday changes had an impact on how we approached coding the transform procedures.

Another challenge we faced was how to deal with transforms that require data from two tables. This was a challenge because of the following scenario: an entity within the warehouse has a business rule that requires data from two source system tables before it can be evaluated, but within the source system data has been changed and captured for only one of those tables. For example, the order status business rule requires data from both the OrderHeader table and the OrderLine table, as illustrated below.

The source system updates the OrderLine table, and CDC captures the changes and sends them to the ETL. The ETL process then runs with only the OrderLine changes and tries to evaluate the order status business rule, but how can it evaluate the rule when the OrderHeader data has not been supplied? As illustrated below.
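As a rough sketch of this scenario in code (not the project's actual implementation), the Python below assumes a hypothetical rule in which an order is "Complete" when its header is approved and every line is shipped. The column names (`approved`, `shipped`), the rule itself, and the `order_status` helper are all assumptions made for illustration.

```python
from typing import Optional

# Hypothetical CDC batch: only OrderLine rows changed, no OrderHeader rows arrived.
order_header_changes: dict[int, dict] = {}
order_line_changes = [
    {"order_id": 1001, "line_no": 1, "shipped": True},
    {"order_id": 1001, "line_no": 2, "shipped": True},
]


def order_status(header: Optional[dict], lines: list[dict]) -> Optional[str]:
    """Illustrative rule only: it needs both the header and its lines."""
    if header is None:
        return None  # the rule cannot be evaluated without OrderHeader data
    if header["approved"] and all(line["shipped"] for line in lines):
        return "Complete"
    return "Open"


# Group the changed lines by order, then try to evaluate the rule per order.
lines_by_order: dict[int, list[dict]] = {}
for line in order_line_changes:
    lines_by_order.setdefault(line["order_id"], []).append(line)

statuses = {
    order_id: order_status(order_header_changes.get(order_id), lines)
    for order_id, lines in lines_by_order.items()
}
print(statuses)  # {1001: None}
```

The `None` result is the gap described above: the lines changed, but without the header the transform has nothing to evaluate the rule against.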

There are many ways this can be resolved. Here are some examples:

- Change the application process to create updates to the required linked tables. This might not solve the problem, as the CDC software may not have committed all of the changes before the ETL process starts running, unless the CDC is part of the ETL. It may also cause performance problems for the application team.

- Design the data warehouse schema and business rules so that the transforms don't require joins between tables. This may not be practical, as the warehouse could essentially become a copy of the source systems' schemas, which would add no value for reporting and analysis.

- Have the transform read the missing data from the data warehouse if CDC hasn't passed on the data. This was a good option to consider; however, there are a few considerations to be aware of:

  - The transform could have performance issues for some of the following reasons: it uses left outer joins on warehouse tables, which, depending on the warehouse schema, could involve a large number of tables; it relies on function calls such as IsNull or Coalesce; and the size of the data warehouse tables could make query tuning very difficult.

  - It adds complexity, because business rules or transforms have to be reversed (untranslated) if the source system data isn't stored natively in the warehouse.

  - The transform is harder to read and maintain, as it isn't just transforming source data.

  - The data warehouse could become even wider or larger if the source systems' native data is stored within the warehouse to help the transform. This would affect query and processing performance and add storage cost.
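The last option, reading missing data from the warehouse, can be sketched roughly as follows. This is a minimal Python illustration under stated assumptions, not the project's transform: the in-memory dictionaries stand in for the CDC staging tables and the warehouse, the `approved` and `shipped` columns and the status rule are hypothetical, and `resolve_header` plays the role that a left outer join with Coalesce would play in SQL.

```python
from typing import Optional

# Hypothetical warehouse copy of native OrderHeader data from earlier loads.
warehouse_order_header = {1001: {"approved": True}, 1002: {"approved": False}}

# Hypothetical CDC batch: OrderLine changed for two orders, OrderHeader for one.
cdc_order_header = {1002: {"approved": True}}
cdc_order_lines = {
    1001: [{"line_no": 1, "shipped": True}],
    1002: [{"line_no": 1, "shipped": False}],
}


def resolve_header(order_id: int) -> Optional[dict]:
    """Prefer the CDC row; fall back to the warehouse row when CDC sent none."""
    cdc_row = cdc_order_header.get(order_id)
    return cdc_row if cdc_row is not None else warehouse_order_header.get(order_id)


def order_status(header: dict, lines: list[dict]) -> str:
    """Illustrative rule only: approved header and every supplied line shipped."""
    if header["approved"] and all(line["shipped"] for line in lines):
        return "Complete"
    return "Open"


statuses = {}
for order_id, lines in cdc_order_lines.items():
    header = resolve_header(order_id)
    if header is not None:  # skip orders unknown to both CDC and the warehouse
        statuses[order_id] = order_status(header, lines)

print(statuses)  # {1001: 'Complete', 1002: 'Open'}
```

Order 1001 is evaluated using the warehouse copy of its header, while order 1002 uses the newer CDC row. In a real transform the same fallback would also be needed for OrderLine rows that did not change in the batch, which is where the join count and tuning concerns listed above start to grow.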
